You don't need AI agent frameworks

Building an on-call AI workflow using AI design patterns

Every week a new framework pops up: LangChain, LangGraph, Google ADK, OpenAI Agents SDK, CrewAI, PydanticAI. They promise speed, but often leave developers buried under abstractions that make debugging harder.

Which framework should I use? Which is better? Which is more "production ready"? It can feel like an impossible and confusing choice.

At the core, LLMs are just stateless functions. The real challenge is knowing when frameworks help, and when they just add noise.

Having spent the last few years building cloud systems that handle trillions of calls, I've learned one crucial lesson: simplicity scales, complexity fails. When systems break, you want code you can understand and debug quickly.

The same principle applies to AI systems. To show this in practice, let's walk through a system my team is building: an on-call triage assistant that classifies incidents, gathers context, and recommends actions. Saving 10-15 minutes per incident translates to days of tedious work saved per week when you're dealing with hundreds of alerts.

We'll design and build this without any frameworks using just boring Python code, then discuss when frameworks actually make sense.

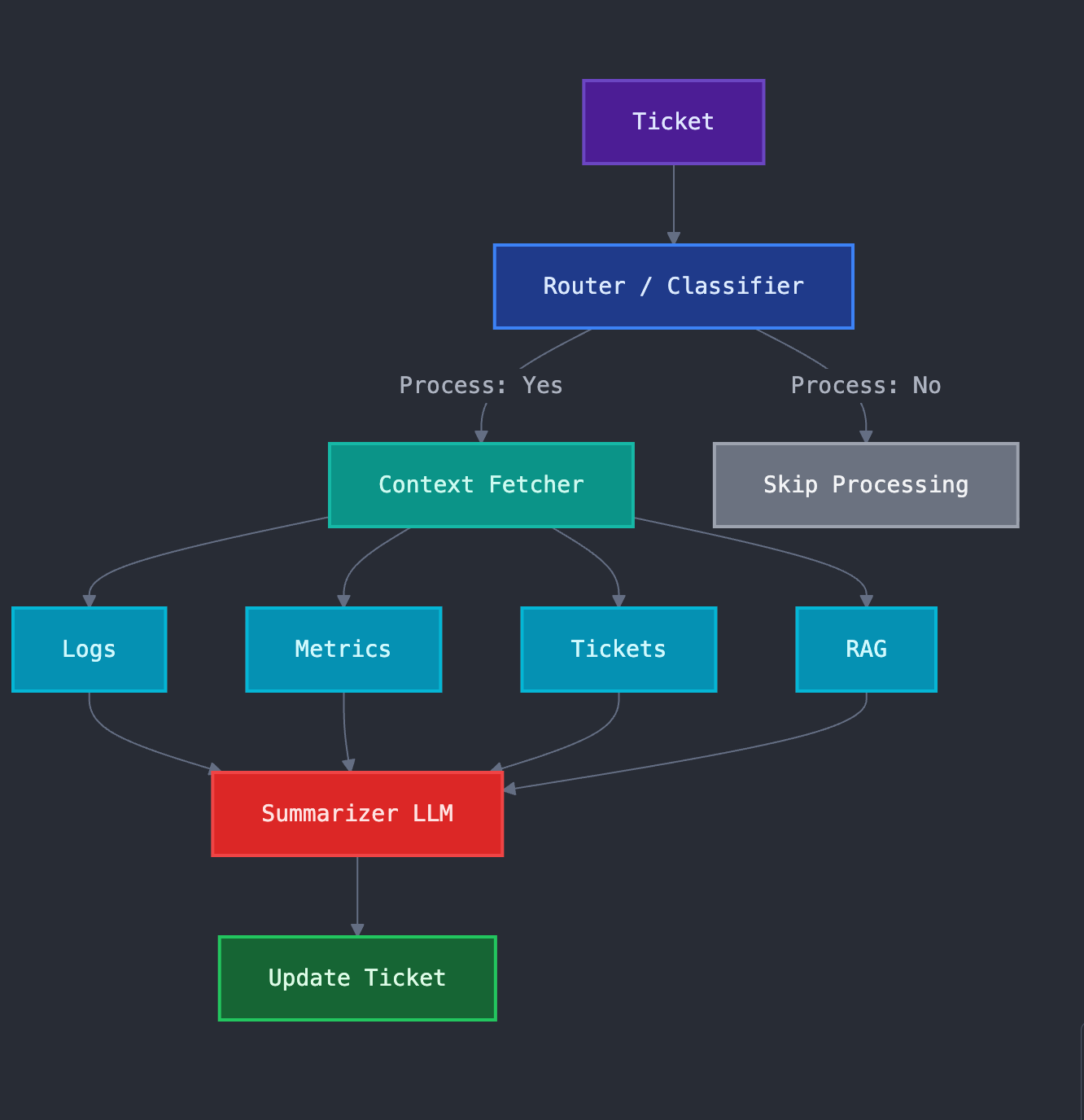

Here's the system we're building:

The Framework Reality Check

As Anthropic's engineering team puts it in their guide "Building Effective Agents":

"Frameworks make it easy to get started by simplifying standard low-level tasks like calling LLMs, defining and parsing tools, and chaining calls together. However, they often create extra layers of abstraction that can obscure the underlying prompts and responses, making them harder to debug. They can also make it tempting to add complexity when a simpler setup would suffice."

This captures the key tension: frameworks solve real problems, but they also introduce real costs. The question isn't whether frameworks are good or bad; it's whether their benefits outweigh their costs for your specific use case.

The Foundation: Steps and State

Think of your AI workflows and agents as a sequence of steps. Each step takes in state and outputs updated state. Each step should:

Do one thing well (UNIX philosophy)

Have explicit inputs/outputs (no hidden dependencies)

Be inspectable (you can test/debug each step)

That's it. Everything else builds on this idea.
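To make the idea concrete, here is a minimal sketch of steps as plain functions over an explicit state object. The names (`TriageState`, `classify`, `record_decision`, `run_pipeline`) are hypothetical and not taken from our actual system, and the keyword check stands in for a real LLM call.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class TriageState:
    """Hypothetical state passed from step to step (immutable on purpose)."""
    ticket_text: str
    category: Optional[str] = None
    notes: Tuple[str, ...] = ()


# A step is just a function: state in, updated state out.
Step = Callable[[TriageState], TriageState]


def classify(state: TriageState) -> TriageState:
    # Stand-in for an LLM classification call so the sketch stays runnable.
    is_alert = "alert" in state.ticket_text.lower()
    return replace(state, category="system_alert" if is_alert else "customer_question")


def record_decision(state: TriageState) -> TriageState:
    note = f"classified as {state.category}"
    return replace(state, notes=state.notes + (note,))


def run_pipeline(
    state: TriageState,
    steps: List[Step],
    trace: Optional[list] = None,
) -> TriageState:
    """Run steps in order; optionally record each intermediate state."""
    for step in steps:
        state = step(state)
        if trace is not None:
            trace.append((step.__name__, state))
    return state
```

Because every step returns a new state instead of mutating shared objects, the optional `trace` list gives you a snapshot after each step, which is the "inspectable" property in practice.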

Core Design Patterns

1. Routing (Strategy Pattern)

Different inputs need different workflows. A system alert needs log analysis. A customer question needs documentation lookup (RAG).

    def handle_ticket(ticket):
        category = classify_ticket(ticket)  # LLM classification
        if category == "system_alert":
            return analyze_system_alert(ticket)
        elif category == "customer_question":
            return answer_customer_question(ticket)
        else:
            return escalate_to_human(ticket)

Why it works: Clear, testable, easy to extend.

Production tip: Collect 100+ labeled tickets to benchmark classification accuracy.
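A benchmark like this can be a few lines of plain Python. The sketch below assumes the classifier is any callable that maps ticket text to a category string; `benchmark_classifier` and `keyword_classifier` are hypothetical names, and the keyword rules only stand in for the LLM call so the example runs on its own.

```python
from collections import Counter
from typing import Callable, Iterable, Tuple


def benchmark_classifier(
    classify: Callable[[str], str],
    labeled_tickets: Iterable[Tuple[str, str]],
) -> Tuple[float, Counter]:
    """Return (accuracy, confusion), where confusion counts (expected, predicted) misses."""
    total = 0
    correct = 0
    confusion: Counter = Counter()
    for text, expected in labeled_tickets:
        predicted = classify(text)
        total += 1
        if predicted == expected:
            correct += 1
        else:
            confusion[(expected, predicted)] += 1
    accuracy = correct / total if total else 0.0
    return accuracy, confusion


def keyword_classifier(text: str) -> str:
    # Placeholder for the real LLM-backed classifier.
    if "alert" in text.lower():
        return "system_alert"
    if "?" in text:
        return "customer_question"
    return "other"
```

The confusion counter is often more useful than the headline accuracy: it tells you which category pairs the classifier mixes up, which is where prompt changes should focus.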

2. Parallelization

When gathering context, sequential calls waste time. I learned this building an AI video app where each image generation took more than 30 seconds. Running 10 of them sequentially took 5+ minutes; running them in parallel took roughly as long as the slowest single call, about 30 seconds. That is a 10x speedup.

    import asyncio

    async def gather_context(ticket):
        # The three helpers are assumed to be coroutine functions.
        # gather runs them concurrently and returns results in call order.
        logs, runbooks, similar = await asyncio.gather(
            search_logs(ticket),
            find_runbooks(ticket),
            find_similar_tickets(ticket),
        )
        return {"logs": logs, "runbooks": runbooks, "similar": similar}

Why it works: Saves time calling (very) slow LLM APIs.
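One thing `gather_context` leaves open is what happens when a single lookup fails. By default, `asyncio.gather` propagates the first exception to the caller. The sketch below is one possible way to tolerate partial failure using `return_exceptions=True`; `fake_lookup` and `gather_context_safely` are hypothetical, and `asyncio.sleep` stands in for slow network or LLM calls.

```python
import asyncio
from typing import Dict, Optional


async def fake_lookup(source: str, ticket_id: str, delay: float, fail: bool = False) -> str:
    """Stand-in for a slow I/O call (log search, runbook lookup, ...)."""
    await asyncio.sleep(delay)
    if fail:
        raise RuntimeError(f"{source} unavailable for {ticket_id}")
    return f"{source} results for {ticket_id}"


async def gather_context_safely(ticket_id: str) -> Dict[str, Optional[str]]:
    sources = ["logs", "runbooks", "similar"]
    # return_exceptions=True puts exceptions in the result list instead of
    # raising, so one failed source does not discard the others.
    results = await asyncio.gather(
        fake_lookup("logs", ticket_id, 0.2),
        fake_lookup("runbooks", ticket_id, 0.2, fail=True),  # simulated outage
        fake_lookup("similar", ticket_id, 0.2),
        return_exceptions=True,
    )
    return {
        source: None if isinstance(result, BaseException) else result
        for source, result in zip(sources, results)
    }
```

Running `asyncio.run(gather_context_safely("T-1"))` takes about as long as one lookup rather than three, and the failed source comes back as `None` so downstream steps can decide whether the remaining context is enough.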

3. Prompt Chaining

A common challenge when building with LLMs is resisting the urge to cram multiple instructions into a single prompt, which also risks overloading the context window.

Split your problem into smaller steps that are easier to test ("do one thing well"). For example, we might summarise a large amount of context, then use the compressed summary to decide on a recommendation.

    def analyze_incident(ticket, context):
        # Step 1: Summarize raw data
        summary = llm_call(f"Summarize key findings: {context}")
        # Step 2: Use summary for recommendations
        recommendations = llm_call(
            f"Based on '{ticket.summary}' and findings '{summary}', recommend next action"
        )
        return recommendations

Why it works: Clearer outputs, easier debugging.

Production tip: Build "golden datasets" for each step to evaluate the results against an ideal output.
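Evaluating each step separately is easier when the LLM is passed in as a plain callable instead of being called through a global. The sketch below is one way to structure that, not the only one: `summarize_context`, `recommend_action`, and `RecordingLLM` are hypothetical names, and the scripted replies stand in for real model output.

```python
from typing import Callable, List, Sequence

# Assumption: the model is represented as "prompt in, text out".
LLM = Callable[[str], str]


def summarize_context(context: str, llm: LLM) -> str:
    return llm(f"Summarize key findings: {context}")


def recommend_action(ticket_summary: str, findings: str, llm: LLM) -> str:
    return llm(
        f"Based on '{ticket_summary}' and findings '{findings}', recommend next action"
    )


def analyze_incident(ticket_summary: str, context: str, llm: LLM) -> str:
    findings = summarize_context(context, llm)
    return recommend_action(ticket_summary, findings, llm)


class RecordingLLM:
    """Test double: returns scripted replies in order and records every prompt."""

    def __init__(self, replies: Sequence[str]) -> None:
        self.replies = list(replies)
        self.prompts: List[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.replies[len(self.prompts) - 1]
```

With this shape, a golden dataset for the summarization step is a list of `(context, ideal_summary)` pairs run against `summarize_context` alone, while the recording double lets you check that the second prompt really contains the first step's output.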

When Frameworks Actually Make Sense

After building systems from scratch, you will have a clearer view of when frameworks add genuine value:

Frameworks Excel When You Need:

Built-in tool calling and structured outputs. Writing tool-calling code and structured-output parsers can be time-consuming. It often makes sense to use a framework for these “augmented LLM” basics.

Features a framework already provides. Some frameworks ship features that would be time-consuming to build yourself. LangGraph, for example, has a built-in way of describing complex workflows as graphs.

Built-in observability and monitoring. Production AI systems generate massive amounts of telemetry. Good frameworks provide structured logging, cost tracking, and performance metrics out of the box.
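To get a feel for the first point, here is roughly what a hand-rolled structured-output parser looks like for a single, tiny schema. It is a sketch under assumptions: the JSON shape (`category`, `confidence`), the allowed categories, and the retry policy are all made up for illustration, and `llm` is any callable that takes a prompt and returns text.

```python
import json
from dataclasses import dataclass
from typing import Callable, Optional

ALLOWED_CATEGORIES = {"system_alert", "customer_question", "other"}


@dataclass
class Classification:
    category: str
    confidence: float


def parse_classification(raw: str) -> Classification:
    """Parse and validate a JSON reply; raise ValueError on anything unexpected."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    category = data.get("category")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    confidence = data.get("confidence")
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be between 0 and 1")
    return Classification(category, float(confidence))


def classify_with_retry(
    llm: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> Classification:
    """Call the model until its reply parses, up to max_attempts times."""
    last_error: Optional[ValueError] = None
    for _ in range(max_attempts):
        try:
            return parse_classification(llm(prompt))
        except ValueError as exc:
            last_error = exc
    raise ValueError(f"no valid reply after {max_attempts} attempts") from last_error
```

That is about forty lines for one two-field schema, before adding tool definitions or nested types. If you have many schemas, this is the kind of repetitive code a library can reasonably take off your hands.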

When to Stay Framework-Free:

Your use case is straightforward. Most AI applications are simpler than they appear. Classification, summarization, and basic Q&A don't need complex orchestration.

You need tight control over costs and performance. Frameworks often make multiple API calls behind the scenes. When every token counts, manual control wins.

You're still learning how LLMs work. Understanding the fundamentals before adding abstraction layers makes you a better AI engineer.

Your team prioritizes debuggability. If your on-call rotation needs to debug AI issues at 3 AM, simpler code wins.

A Pragmatic Framework Decision Tree

Evaluate frameworks against specific pain. Don't adopt a framework for everything; adopt it for the specific problems it solves better than your code (e.g., using PydanticAI for structured output and tool calling, or LangGraph for workflows).

Keep the core logic framework-free. Even if you use frameworks for orchestration, keep your business logic in plain functions that can be tested independently.

Conclusion

We designed the core of a triage agent using three patterns: routing, parallelization, and prompt chaining. No framework required.

Start simple. Build your first version with plain code. When you hit specific limitations, evaluate frameworks as solutions to those problems, not as silver bullets.

Most AI systems are just normal software systems. The same principles that guide good software apply here: clarity over cleverness, simplicity over sophistication, understanding over abstraction.