How I made my AI application 3x faster using AsyncOpenAI.

How to speed up your slow AI applications.

If you work with GenAI for any length of time, you'll realize one thing: AI models can be (really, really) slow.

I was recently working on an AI video generator that has three primary steps:

Generate 10 images using OpenAI's gpt-image-1 model

Send each of these images to an image-to-video model (Kling) to generate scenes

Manually edit these clips in a video editor like Final Cut Pro

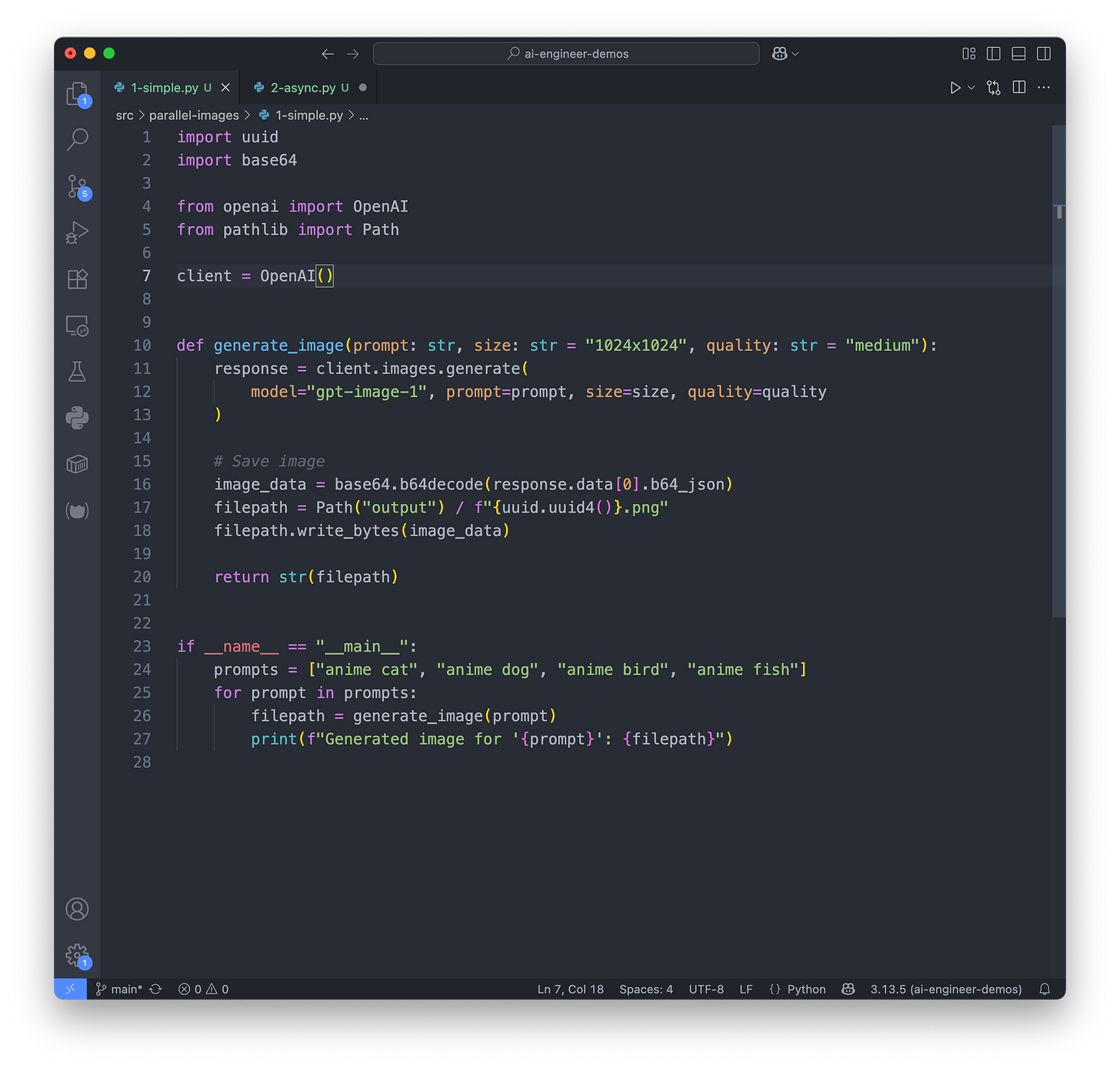

My initial naive prototype used image generation code similar to the example below. It worked but it was painfully slow (~20 seconds per image):
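A minimal sketch of what such a sequential version could look like, assuming the official `openai` Python package and an `OPENAI_API_KEY` in the environment. The function names, prompts, and file names are placeholders of my choosing rather than the original prototype, and the client is passed in as a parameter (also my choice) so the helpers can be exercised with a stand-in object.

```python
import base64
import time


def generate_image(prompt: str, client) -> bytes:
    """Request one image and block until the PNG bytes come back."""
    result = client.images.generate(model="gpt-image-1", prompt=prompt)
    # gpt-image-1 returns the image base64-encoded in `b64_json`.
    return base64.b64decode(result.data[0].b64_json)


def generate_images(prompts: list[str], client) -> list[bytes]:
    """Generate images one after another; total time is the sum of all waits."""
    images = []
    for prompt in prompts:
        started = time.perf_counter()
        # This call blocks, so the next request cannot start until it returns.
        images.append(generate_image(prompt, client))
        print(f"{prompt!r} took {time.perf_counter() - started:.1f}s")
    return images


def main() -> None:
    # Imported here so the helpers above accept any client-like object.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    prompts = ["a lighthouse at dawn", "a city street in the rain"]  # placeholders
    for i, png in enumerate(generate_images(prompts, client)):
        with open(f"image_{i}.png", "wb") as f:
            f.write(png)
```

Calling `main()` runs the whole batch. Each request only starts after the previous one has finished, which is where the waiting time piles up.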

When generating many images sequentially, that wait time quickly adds up. Thankfully, OpenAI provides an async version of its API client that can drastically speed up our code.

The Solution

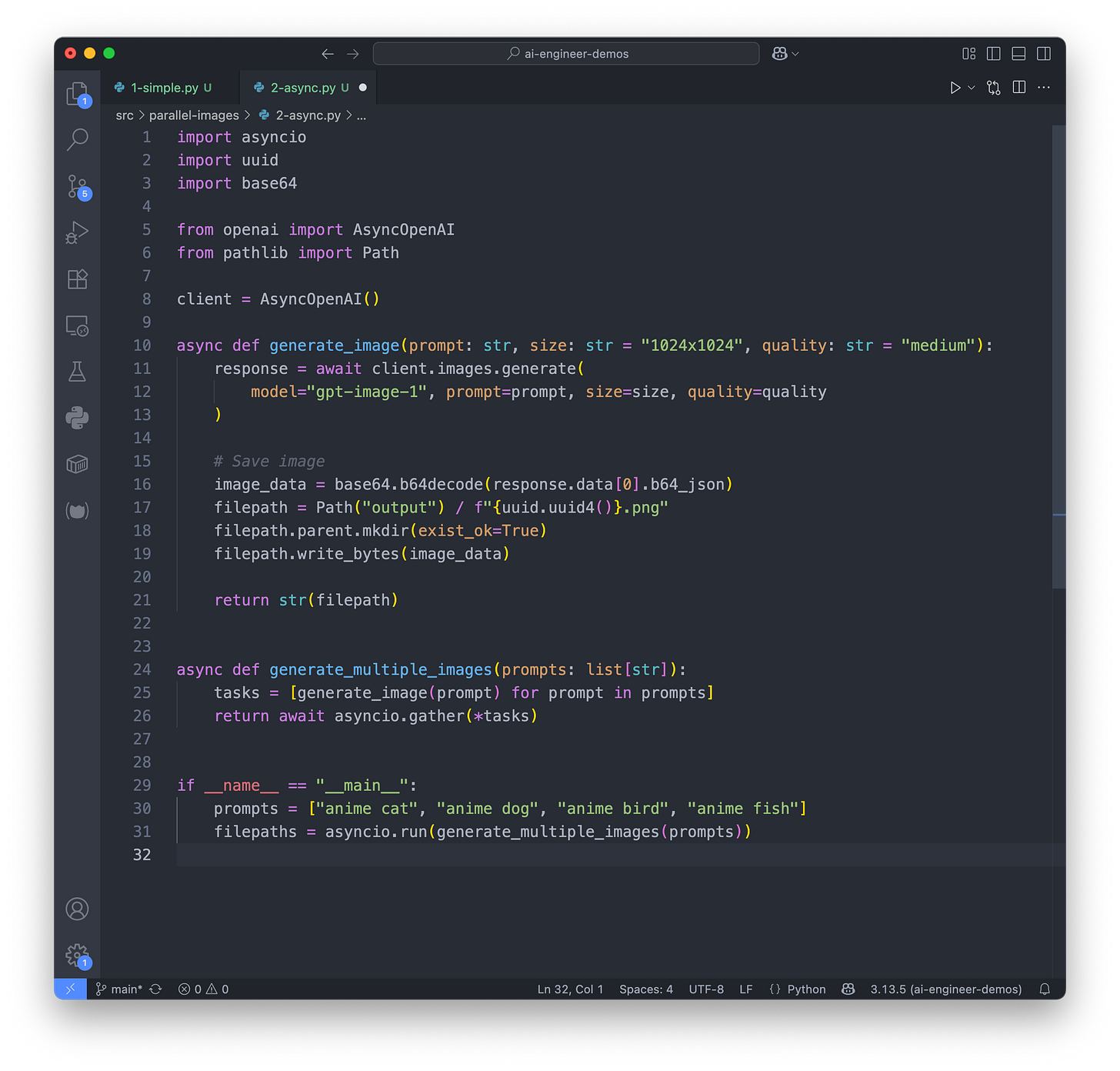

If you're working with the OpenAI client, you'll notice the quickstart examples all use the synchronous client. When you need to make several requests, there is a better alternative: the async client, AsyncOpenAI.

    from openai import AsyncOpenAI

    client = AsyncOpenAI()

Here's the same functionality rewritten using OpenAI's AsyncOpenAI client:
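A minimal sketch of such a rewrite, assuming the official `openai` package and an `OPENAI_API_KEY` in the environment. The names `default_client`, `generate_image`, and `generate_images` are illustrative, and the lazily created client is my own addition so the module can be imported (and tested with a stand-in client) without an API key.

```python
import asyncio
import base64
from functools import lru_cache


@lru_cache(maxsize=1)
def default_client():
    """Create one shared AsyncOpenAI client on first use (reads OPENAI_API_KEY)."""
    from openai import AsyncOpenAI

    return AsyncOpenAI()


async def generate_image(prompt: str, client=None) -> bytes:
    """Request one image without blocking the event loop while waiting."""
    client = client or default_client()
    result = await client.images.generate(model="gpt-image-1", prompt=prompt)
    # gpt-image-1 returns the image base64-encoded in `b64_json`.
    return base64.b64decode(result.data[0].b64_json)


async def generate_images(prompts: list[str], client=None) -> list[bytes]:
    """Start every request at once and wait for all of them to finish."""
    # gather returns results in the same order as `prompts`.
    return await asyncio.gather(*(generate_image(p, client) for p in prompts))
```

From a script you would run it with something like `images = asyncio.run(generate_images(prompts))`. By default `asyncio.gather` raises the first exception it encounters; passing `return_exceptions=True` returns errors alongside the successful results instead.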

What Changed?

The async implementation is significantly faster when generating multiple images: about 30 seconds instead of 90 for 4 images. That is a 3x speed improvement with identical results. Because the requests run concurrently, the total time is limited primarily by the slowest individual request, not the sum of all requests.
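To see the "slowest request, not the sum" effect without spending API credits, here is a small simulation. It assumes `asyncio.sleep` is a fair stand-in for a network-bound request, and the durations are made-up, scaled-down values rather than measurements.

```python
import asyncio
import time

# Made-up request durations in seconds (scaled down from ~20 s image calls).
DURATIONS = [0.2, 0.3, 0.1, 0.25]


async def fake_request(seconds: float) -> float:
    """Stand-in for an API call: waits without blocking the event loop."""
    await asyncio.sleep(seconds)
    return seconds


async def run_sequential(durations: list[float]) -> float:
    """Await each request before starting the next; returns elapsed seconds."""
    start = time.perf_counter()
    for d in durations:
        await fake_request(d)
    return time.perf_counter() - start


async def run_concurrent(durations: list[float]) -> float:
    """Start all requests together; returns elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(fake_request(d) for d in durations))
    return time.perf_counter() - start


sequential = asyncio.run(run_sequential(DURATIONS))
concurrent = asyncio.run(run_concurrent(DURATIONS))
print(f"sequential: {sequential:.2f}s (sum of waits is {sum(DURATIONS):.2f}s)")
print(f"concurrent: {concurrent:.2f}s (longest wait is {max(DURATIONS):.2f}s)")
```

The sequential run takes roughly the sum of the waits, while the concurrent run takes roughly as long as the longest one. Real API calls add overhead and can be throttled, so the measured speedup is usually smaller than this idealized picture.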

Other Considerations

When sending requests in parallel, it's important to keep rate limits in mind. One way to stay within them is to cap the number of requests in flight at any moment with a semaphore.

    from asyncio import Semaphore

    # Limit concurrent requests
    semaphore = Semaphore(5)  # Max 5 concurrent requests


    async def generate_image_rate_limited(prompt: str):
        # Wait for a free slot, then delegate to the async generate_image()
        async with semaphore:
            return await generate_image(prompt)
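To check that the semaphore really caps concurrency, here is a self-contained sketch that swaps the API call for a simulated one and records how many calls overlap. `fake_generate_image`, the `state` dictionary, and the limit of 5 are illustrative assumptions.

```python
import asyncio

MAX_CONCURRENT = 5  # illustrative limit; tune it to your own rate limits


async def fake_generate_image(prompt: str, state: dict) -> str:
    """Simulated API call that tracks how many calls are in flight."""
    state["active"] += 1
    state["peak"] = max(state["peak"], state["active"])
    await asyncio.sleep(0.05)  # stand-in for network latency
    state["active"] -= 1
    return f"image for {prompt}"


async def generate_all(prompts: list[str], limit: int = MAX_CONCURRENT):
    """Run every prompt, never allowing more than `limit` calls at once."""
    semaphore = asyncio.Semaphore(limit)
    state = {"active": 0, "peak": 0}

    async def limited(prompt: str) -> str:
        async with semaphore:  # waits here while `limit` calls are running
            return await fake_generate_image(prompt, state)

    results = await asyncio.gather(*(limited(p) for p in prompts))
    return results, state["peak"]


results, peak = asyncio.run(generate_all([f"scene {i}" for i in range(12)]))
print(f"{len(results)} images generated, peak concurrency {peak}")
```

All 12 tasks are created up front, but only five get past the `async with` at a time. Note that a semaphore limits how many requests overlap, not how many are sent per minute, so a strict requests-per-minute quota may need additional throttling.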

Summary

The AsyncOpenAI client is a game-changer when you need to make multiple API calls. It mirrors the regular client, so the same async pattern works for any OpenAI API endpoint.
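As an illustration of the same pattern on a different endpoint, here is a sketch that sends several chat completion requests concurrently. The model name `gpt-4o-mini`, the prompt wording, and the function names are assumptions chosen for the example; the client is passed in so any AsyncOpenAI-compatible object works.

```python
import asyncio


async def summarize(client, text: str, model: str = "gpt-4o-mini") -> str:
    """Ask a chat model for a one-sentence summary of `text`."""
    response = await client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": f"Summarize in one sentence: {text}"}],
    )
    return response.choices[0].message.content


async def summarize_all(client, texts: list[str]) -> list[str]:
    """Summarize every text concurrently, keeping the input order."""
    return await asyncio.gather(*(summarize(client, t) for t in texts))


async def main() -> None:
    # Imported here so the helpers above accept any client-like object.
    from openai import AsyncOpenAI

    client = AsyncOpenAI()  # reads OPENAI_API_KEY from the environment
    for summary in await summarize_all(client, ["first text", "second text"]):
        print(summary)
```

Running `asyncio.run(main())` sends both requests at once. The structure is the same as for images: one coroutine per request, collected with `asyncio.gather`.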

Use AsyncOpenAI when:

Generating multiple images/completions in batch

Building web applications that need to stay responsive

Processing user requests that involve multiple API calls

Stick with the regular OpenAI client when:

Making single, one-off requests

Building simple scripts where complexity isn't worth it

You can find the code samples on GitHub for free here.